Overview

Large up.time deployments may achieve performance benefits by transferring up.time reporting functions to a dedicated reporting instance that only generates and serves reports.

System monitoring involves intensive insertion of data into the up.time DataStore, and running reports over long date ranges and many systems can require a large number of DataStore requests. Most of the larger up.time deployments where this becomes a concern already use an external database such as SQL Server or Oracle, which provides plenty of capacity within the database. By distributing up.time's activity across two instances, we can use more of this capacity than we could with a single instance.

If you are considering deploying an up.time reporting instance in your environment, please contact support beforehand so that we can help assess whether a remote reporting instance will help.

Reporting Instance Architecture

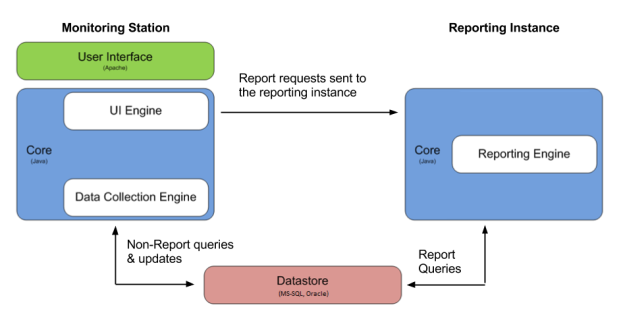

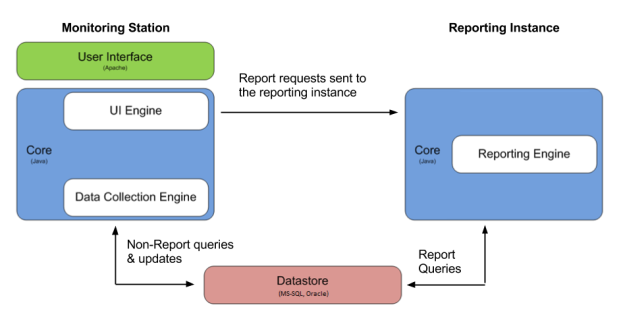

An up.time deployment with a reporting instance has two separate up.time installations: the Monitoring Station and the reporting instance.

Both installations will connect to the same database server.

The following graphic illustrates the relationship between the Monitoring Station and reporting instance:

Step 1 - Install the Reporting Instance

This step assumes that the Monitoring Station is already installed and collecting data. The reporting instance will be installed as a new copy of up.time on a separate server.

Before installing the reporting instance, ensure that the server that will host the reporting instance supports the minimum hardware and operating system requirements for an up.time Monitoring Station. For more information on sizing and installing a Monitoring Station, see Recommended Monitoring Station Hardware.

The operating systems of the two hosts can be different. However, the up.time version must be the same for both the Monitoring Station and the reporting instance.

Step 2 - Configure the Reporting Instance Settings

To enable a separate up.time reporting instance, configuration settings must be changed on the Monitoring Station and additional configuration options must be added to the uptime.conf file on the reporting instance.

On the reporting instance, add the following entry to the uptime.conf file to disable all non-reporting functions:

reportingInstance=true

We also need to reduce connectionPoolMaximum in uptime.conf from its default of 100 to 50:

connectionPoolMaximum=50

Also refer to the Mail Server settings on your Monitoring Station and copy the settings into the uptime.conf file on the reporting instance to enable it to send reports via email:

smtpServer=mail.yourserver.com
smtpPort=25
smtpUser=username
smtpPassword=password
smtpSender="uptime Monitoring Station"
smtpHeloString=yourserver.com

In the smtpSender entry, you can change uptime Monitoring Station to another value such as up.time Reports.
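The reporting instance settings above can be sanity-checked with a short script before the service is started. The following is a minimal sketch, assuming that uptime.conf consists of simple key=value lines and that lines starting with # are comments. The function names parse_conf and check_reporting_conf are hypothetical and not part of up.time, and treating smtpServer, smtpPort, and smtpSender as required is also an assumption.

```python
def parse_conf(text):
    """Parse simple key=value lines into a dict (assumed uptime.conf layout)."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blanks, comments (assumed to start with '#'), and lines without '='.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip()
    return settings


def check_reporting_conf(text):
    """Return a list of problems found in a reporting instance's uptime.conf text."""
    settings = parse_conf(text)
    problems = []
    if settings.get("reportingInstance") != "true":
        problems.append("reportingInstance is not set to true")
    if settings.get("connectionPoolMaximum") != "50":
        problems.append("connectionPoolMaximum is not set to 50")
    # Assumption: these three mail settings are the minimum needed to send reports.
    for key in ("smtpServer", "smtpPort", "smtpSender"):
        if key not in settings:
            problems.append("missing mail setting: " + key)
    return problems


sample = """reportingInstance=true
connectionPoolMaximum=50
smtpServer=mail.yourserver.com
smtpPort=25
smtpSender="uptime Monitoring Station"
"""
print(check_reporting_conf(sample))
```

An empty list means the checked entries are present with the values described in this step. The script does not validate the mail server itself.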

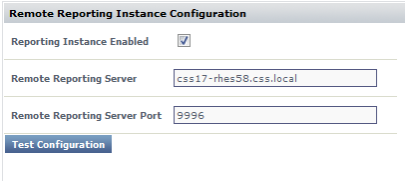

Log in to the web interface of the Monitoring Station. In the navbar, click Config and then click Remote Reporting in the left side menu. Click the Edit Configuration button to open the following window:

Edit the following fields:

Step 3 - Verify the Monitoring Station can Access the Reporting Instance

After you have set up the Monitoring Station and reporting instance, verify that the two instances can communicate with each other:

A pop-up window will appear. If the reporting instance was configured properly, a message similar to Reporting is running on report_host:9996 will be displayed in the pop-up window. If an error message appears, check the configuration and try again.
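If an error message appears, it can help to rule out basic network problems first. The following is a minimal sketch that only checks whether a TCP connection to the reporting port can be opened from the Monitoring Station host. It is not the check that up.time itself performs, and the function name can_connect is hypothetical. Port 9996 is taken from the example message above.

```python
import socket


def can_connect(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host name and opens a TCP socket.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False
```

For example, can_connect("report_host", 9996) returns False if a firewall blocks the port or the host name does not resolve, where report_host stands in for the reporting instance's real host name. A True result only shows that the port is reachable, not that the reporting instance is configured correctly.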

Step 4 - Published Reports Folder Changes

Now that the remote reporting instance has been set up and we’ve confirmed that the Monitoring Station can push report generation requests to the remote server, we need to accommodate the change to the ‘Published Reports’ folder. As mentioned in the Architecture section above, all published reports are now saved on the reporting server instead of locally on the Monitoring Station.

That means we have two options for how to handle this change:

myportal.custom.tab2.enabled=true
myportal.custom.tab2.name=Published Reports
myportal.custom.tab2.URL=http://remote-reports-server:9999/published/

Additional Steps using the Bundled MySQL Datastore with Remote Reporting

The above steps for setting up a remote reporting instance were tailored for environments where the up.time DataStore runs in an external database such as Oracle or SQL Server. It is also possible to set up a remote reporting instance that connects to the bundled MySQL DataStore service on the Monitoring Station. The potential performance boost won’t be as large as with an external database, because the bundled MySQL DataStore still runs on the Monitoring Station and shares resources with the main up.time instance.

Using an external database means that a database user with read/write access is already available, along with a larger database connection pool. To use the bundled MySQL DataStore with the reporting instance, we need to take some additional steps to account for this.

Granting the uptime database user external access:

Run the following command to connect to the uptime datastore as the root user:

mysql -uroot -puptimerocks

Once connected to the database, run the grant statement below. This statement allows the uptime user to connect to the database from any external host, so for additional security you can replace the % symbol with the IP address or hostname of the reporting instance.

grant all privileges on uptime.* to "uptime"@"%" identified by "uptime";
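To illustrate replacing the % symbol, the following minimal sketch builds the same grant statement for a specific host. The function name grant_statement is hypothetical, the character check is a simple precaution and not a complete hostname validator, and 192.0.2.10 is a documentation-only example address that stands in for the reporting instance.

```python
import re


def grant_statement(host="%"):
    """Build the grant statement from this article for a given client host."""
    # Allow only characters plausible in a hostname, IP address, or MySQL wildcard.
    if not re.fullmatch(r"[A-Za-z0-9.%_:-]+", host):
        raise ValueError("unexpected characters in host: " + repr(host))
    return 'grant all privileges on uptime.* to "uptime"@"{}" identified by "uptime";'.format(host)


# 192.0.2.10 is a placeholder address, not a real reporting instance.
print(grant_statement("192.0.2.10"))
```

The printed statement can then be run in the mysql session in place of the version that uses the % wildcard.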

Increasing the max_connections on the bundled mysql instance:

Find the line "max_connections=110" in the file and change it to:

max_connections=160
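The new value of 160 equals the previous limit of 110 plus the reporting instance's connectionPoolMaximum of 50. That relationship is inferred from the numbers in this article and is not a documented up.time rule. Under that assumption, the edit can be sketched as follows, with a hypothetical helper named raise_max_connections that works on the configuration text passed to it.

```python
import re


def raise_max_connections(config_text, extra_connections=50):
    """Return config_text with max_connections increased by extra_connections."""
    def bump(match):
        # Keep the "max_connections=" prefix and add to the existing number.
        return "{}{}".format(match.group(1), int(match.group(2)) + extra_connections)

    new_text, count = re.subn(r"(?m)^(max_connections\s*=\s*)(\d+)", bump, config_text)
    if count == 0:
        raise ValueError("no max_connections line found")
    return new_text


print(raise_max_connections("max_connections=110"))
```

Other lines in the text are left unchanged, and the function raises an error rather than silently doing nothing when no max_connections line is present.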